Fueling tension

The Maldivian presidential election concluded recently with Mohamed Muizzu winning 54 percent of the vote, making him the newly elected president of the Maldives. His campaign slogan, "India Out," has sparked extensive speculation in Indian media that he is pro-China, which could potentially harm India's strategic interests in South Asia and the Indian Ocean. India places great importance on its relationship with the Maldives. The Indian Ministry of External Affairs (MEA) described India's position in the Maldives as "pre-eminent," with relations extending to virtually all areas. However, the excessive focus on the newly elected Maldivian president reflects India's strategic self-doubt, as it has long emphasized the so-called Chinese threat in strategic terms.

When considering bilateral issues, India should do more self-reflection rather than fabricating a "Chinese threat" out of thin air. The "India Out" movement in the Maldives began as early as 2020, reflecting widespread dissatisfaction among Maldivians with India.

Maldivians believe that India's longstanding military presence in their country is a threat to their sovereignty. India maintains a military presence in the Maldives to operate the Dornier aircraft and two helicopters gifted to Male in 2020 and 2013, respectively. In November 2021, the Maldives National Defence Force (MNDF) informed the parliamentary committee on security services that 75 Indian military personnel were stationed in the Maldives to operate the aircraft and choppers.

In addition to its military presence, India has assisted in building a new police academy in the Maldives, which houses the Maldives National College of Policing and Law Enforcement. Some believe this could interfere with the Maldives' independent law-enforcement authority.

The Maldives is also concerned about India's involvement in the development of Uthuru Thila Falhu, an island near the capital, Male, which is part of the two countries' defense cooperation. In 2021, the countries signed an agreement to develop and maintain a coast guard harbor and dockyard at Uthuru Thila Falhu. The island offers a vantage point for monitoring incoming and outgoing traffic at the main port in Male, which gives it a highly strategic position.

While security cooperation between the Maldives and India has a long history, from the Maldives' perspective, such cooperation should be based on mutual equality, rather than actions that may infringe on national sovereignty. The strong call for "India Out" in the Maldives does not necessarily equate to "China In" as there has been no "China In" movement thus far. India views the Maldives as a strategic outpost in the Indian Ocean, but it is not necessary to always perceive China as an imaginary enemy. Such behavior only highlights a lack of strategic confidence.

China has long pursued cooperation with neighboring countries based on the principle of mutual benefit. China does not interfere in the domestic affairs and strategic autonomy of other countries. China's cooperation with the Maldives primarily focuses on improving livelihoods and social development, such as upgrading and constructing major international airports in the Maldives and building the China-Maldives Friendship Bridge.

India has maintained a "big brother" mentality in dealing with South Asian affairs, considering the region as its backyard. Whether India can treat South Asian countries, including Bangladesh, Nepal, the Maldives and Sri Lanka, as equals has been a question mark for a long time. On the surface, India follows a "neighborhood first" policy and plays a leading role in South Asian affairs. However, in reality, India leverages its geopolitical advantage and size in the region to compel South Asian countries to make choices that favor India on critical issues.

India is eagerly awaiting the "Indian Century." New Delhi has hosted numerous diplomatic events around the G20 summit this year, striving to present itself as a major global power with significant achievements.

Meanwhile, India has been assertive in handling its diplomatic relationships, such as cracking down on Chinese companies in India and imposing obstacles for Chinese citizens to obtain visas. However, the excessive attention on the Maldivian election result reflects India's self-doubt from another perspective. India needs to adjust its mind-set. Strategic self-doubt and excessive suspicion of China do not benefit India's aspirations for a major global role.

Beijing Daxing International Airport had its fourth anniversary on Monday, having been put into operation on September 25, 2019. It had notched more than 80 million passenger trips as of Sunday, the airport said.

The airport has had a total of 682,900 flight takeoffs and landings, a passenger throughput of 82.48 million trips, and a cargo throughput of 557,300 tons, the airport added.

Daxing airport restarted international passenger flights in January this year, and has made efforts to expand routes to Europe, North America, Japan and South Korea, while continuously expanding into the Southeast Asian and Oceanian markets.

As of the end of August, the airport has opened a total of 202 routes, covering 185 destinations in 18 countries and regions.

The airport handled 7.94 million passenger trips during the two-month summer travel rush that ended on August 31, up 163 percent from the same period last year and its best summer travel rush performance since opening.

The Civil Aviation Administration of China is accelerating the resumption of outbound travel.

The number of international passenger flights per week has recovered to 52 percent of the 2019 level, and the number of countries with passenger flight connectivity has recovered to nearly 90 percent of pre-pandemic levels, the administration said earlier this month.

Editor's Note:

On Tuesday, Bakyt Torobaev, deputy chairman of the Cabinet of Ministers of Kyrgyzstan, held a meeting with representatives of many Chinese businesses to boost trade and investment cooperation with China. Following the meeting, the Global Times reporter Wang Cong interviewed Torobaev. The following is an excerpt from the interview.

GT: What are the main goals of your trip to China? And what has been achieved so far?

Torobaev: At the invitation of the vice premier of China's State Council, we participated in the 2023 Global Sustainable Transport Forum. In my speech there, I said that we should further advance cooperation in the transport sector. Transportation is the same as the human blood circulation system. By developing transportation, we can increase trade cooperation. The directions of cooperation with Kyrgyzstan include the construction of railways, the construction of renewable energy power plants, the development of irrigation water technology, the development of education, etc. We hope to have closer cooperation with China in all fields.

GT: What are the top areas that have great potential for China-Kyrgyzstan cooperation?

Torobaev: Priority industries that need development are agriculture, high-tech fields, hydropower, and mineral resource development. We provide all conveniences for Chinese enterprises, and we hope that Chinese goods can be sold to other countries through Kyrgyzstan. Kyrgyzstan is a member of the Eurasian Economic Union and has obtained the Generalized Scheme of Preferences Plus (GSP+) with 6,200 products that can be exported to Europe. We hope to become a dry port for Chinese products exported to other countries.

Accompanied by a piercing whistle, the ship Ganghang Runyang 6002, loaded with 96 TEUs carrying 2,000 tons of wheat and rice, set off from Chunjiang Port of Jiangbei Modern Grain Logistics Park in Jining City, Shandong Province. Seven days later, these high-quality grains will arrive at Taicang Port along the Beijing-Hangzhou Grand Canal, and then be transported to Xintian Port in Wanzhou, Chongqing via the Yangtze River waterway, while the vessel will return to Jining with southern fertilizers and other goods. This marks the official opening of the first "Jining-Wanzhou" container route, and it is another big step in Jining Port's westward development. Jining, known as the "Canal Capital" and the "Ridge of the Canal," draws on the unique advantages of the golden waterway of the Beijing-Hangzhou Grand Canal and the staged completion of advanced infrastructure. On the east side of Longgong Port is a customs supervision site still under construction, which is expected to be completed in December this year. After it is officially put into use, Jining will become "an estuary in the doorway."

Longgong Port is strategically located at the intersection of the Longgong River and the Beijing-Hangzhou Grand Canal, just 7 kilometers in a straight line from Jining West Railway Station, which allows combined road, rail and water transport. The construction and commissioning of Longgong Port is a major initiative in promoting energy transformation in Shandong. A service team from the power supply company is stationed at the port construction site. Jining Longgong Port is the first inland river container port in China to realize unmanned intelligent operation. By the end of 2023, a total of 10 berths will be built, turning the port into the largest inland river shipping center in northern China. Jining Power Supply Company of State Grid has set up a service team to guide the construction of special railway lines and ensure that each project has a dedicated team providing full life-cycle services. To ensure a reliable electricity supply for port construction, the company stepped in early, followed up with services, completed the Longgong power supply project, and upgraded the lines serving the port, transforming 4.9 kilometers of 10 kV lines and 21.4 kilometers of 110 kV lines.

A total of 826 million domestic passenger trips were made in China during the 8-day Mid-Autumn Festival & National Day holidays, a year-on-year increase of 71.3%. Holiday tourism generated 753.43 billion yuan ($104.68 billion), up 129.5% year-on-year, official data showed on Friday.

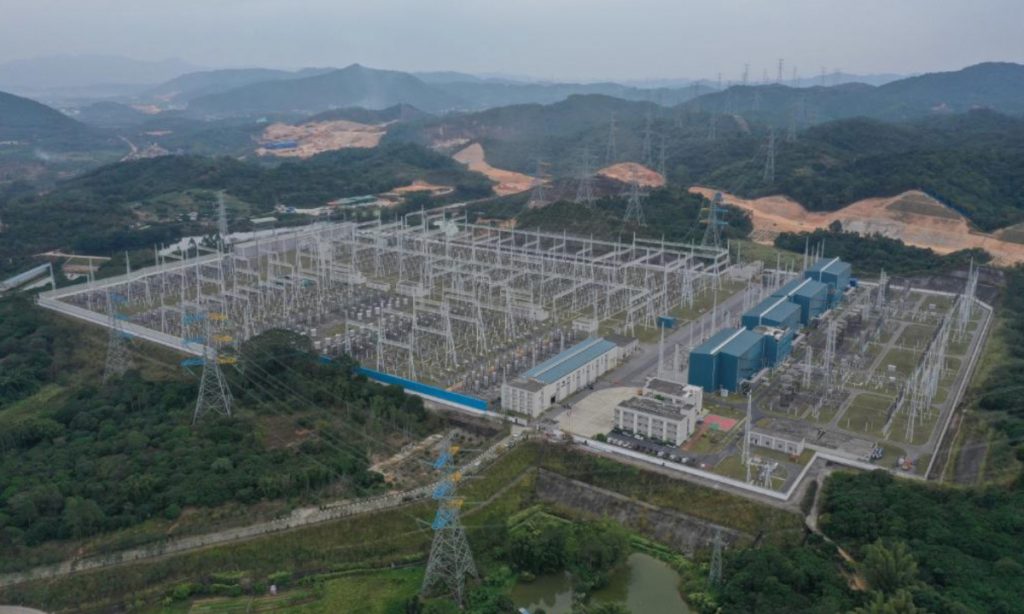

The first converter transformer using China-made on-load tap changers was successfully put into operation on Sunday at a crucial west-to-east power transmission project located in South China's Guangdong Province, according to a report published by xinhuanet.com.

Feng Dong, a senior executive at a subsidiary of the China Southern Power Grid, was quoted by the report as saying that China had achieved a technological breakthrough from scratch in the field of converter transformer on-load tap changers, and had fully localized the industrial chains for components and other products.

This means China has officially broken through the restrictions on this core technology in high-end electrical equipment, Feng said.

Transmitting electricity from western to eastern areas of China requires long-distance, large-capacity, high-voltage direct current facilities, and both the sending and receiving terminals need converter transformers that weigh more than 300 tons.

On-load tap changers of a converter transformer are used to adjust the voltage, power load and current, similar to the function of a gearbox in a car.

Deng Jun, a senior technical expert at the aforementioned company, also said that an on-load tap changer of a converter transformer has about 1,000 components, and is a highly complex and sophisticated piece of equipment.

According to the Xinhua report, this technology used to be mastered by only a few overseas companies. When a technical fault occurred, Chinese companies had no choice but to replace the products with imported goods of the same model, which took about three to four months to order, thus posing challenges to the safety of power operation in the country.

The report also cited a deputy general manager of the company as saying that the company has established a team in partnership with upstream and downstream companies along the industrial chains.

After more than two years of effort, the team has overcome technical bottlenecks such as vacuum switch tubes and successfully developed a large-capacity converter transformer on-load tap changer with a rated capacity of 6,000 kilovolt-amperes, a maximum voltage of 6,000 volts and a maximum rated current of 1,300 amperes.

Using the domestically made on-load tap changers could save nearly 40 million yuan ($5.56 million) in the building of ultra-high voltage direct current power transmission projects, the manager said.

The discovery of a new species of gammaridea in the Irtysh River of Northwest China's Xinjiang Uygur Autonomous Region proves that the Tianshan Mountains and the surrounding areas are the world's origin of cold-water organisms, according to a press conference held by the information office of the region on Tuesday.

The person in charge of the scientific research department announced the initial results of the third comprehensive scientific investigation in the Xinjiang region during the conference.

According to Zhang Yuanming, director of the Xinjiang Institute of Ecology and Geography under the Chinese Academy of Sciences and head of the leading unit of the expedition, the third comprehensive scientific expedition in Xinjiang, conducted in 2022, has yielded significant achievements.

In the wild fruit forest of the Tianshan Mountains, the expedition discovered two new species of moss, 39 new species of parasitic natural enemies, and a new species of gammaridea.

New discoveries have been made in the study of the formation and evolution of the Taklimakan Desert, and a new understanding has been proposed that the Taklimakan Desert may have been formed 300,000 years ago.

Furthermore, the expedition clarified the superimposed effects of wind dynamics, underlying surface, and sand sources on aeolian sand geomorphology, and confirmed that the Tarim Basin dust can affect North China and the Qinghai-Xizang Plateau.

In addition, researchers participating in the expedition built 26 automatic monitoring stations for ecosystems in no man's land by integrating drones, satellites and the Internet of Things.

The databases of the first and second comprehensive scientific expeditions in Xinjiang were rebuilt, and the data sharing service for scientific expeditions in Xinjiang was established.

Moreover, the expedition's researchers determined the overall water flow status of many important rivers and provided decision-making suggestions for regional water resource development.

According to publicly available data, the third comprehensive scientific expedition to Xinjiang was officially launched in December 2021. The scientific research was designed to focus on the green and sustainable development of Xinjiang, get a comprehensive picture of Xinjiang's resources and environment, scientifically evaluate the carrying capacity of Xinjiang's resources, propose strategies and road maps for Xinjiang's future ecological construction and green development, and cultivate a strategic scientific team rooted in Xinjiang and engaged in resources and environment research in arid areas.

From space robots to greenhouse gas remote sensing, from placing fish on the space station's dinner table to mutagenesis breeding of roses for essential oil extraction… The first International Space Science and Scientific Payload Competition kicked off in Foshan, South China's Guangdong Province on Thursday, attracting youths from across the world to vie for a "ticket" to participate in the China Space Station and the International Space Station (ISS).

Despite an increasingly complex world where tensions are rising among global players, space cooperation and people-to-people exchanges remain robust and vibrant, providing a channel for connection that transcends differences and promotes the building of a shared future for mankind, students and experts from the US and Europe told the Global Times at the event.

The competition is the first international aerospace competition in China aimed at gathering and nurturing outstanding global talents and projects in space science and payload technology. The winning projects will be recommended as candidates for flights to the China Space Station and the ISS, the Global Times learned on Thursday.

The competition is jointly initiated by the Chinese Institute of Electronics, Beijing Institute of Technology, the International Academy of Astronautics, Chinese Society of Astronautics, and China Space Foundation.

Themed "A Shared Space for a Better Future," it is committed to promoting significant scientific discoveries and innovative technological breakthroughs in the aerospace field, driving the civil use of aerospace technology and promoting a sharing mechanism for innovative achievements to benefit all humanity.

Olivier Contant, the French-American Executive Director of the International Academy of Astronautics (IAA), emphasized the academy's longstanding commitment to promoting peaceful collaboration among countries during an exclusive interview with the Global Times. "The IAA has been dedicated for over 60 years to enabling all nations to participate in space programs. Our mission is to foster global unity through research, conferences, technologies, and collaboration, all focused on the peaceful use of space. In the scientific world, you don't have these boundaries, politics."

"Competition always happens, but that's normal. Our focus remains on promoting peaceful collaboration for the benefit of mankind regardless of what's happening. One way for the Academy to do it is by conducting more than 100 space studies with world class experts based on international consensus," he said.

The competition has attracted student groups from more than 30 countries including Spain, Italy, Egypt, Russia, Pakistan, Argentina and Mexico. There are 116 teams - 81 from China and 35 from abroad. Thirteen high school groups were invited to participate as well.

Úrsula Andrea Martinez Álvarez and Gigor Dan-Cristian, PhD students in aerospace engineering from the Technical University of Madrid, Spain, told the Global Times that their project, thermocapillary-based control of a free surface in microgravity, has been selected to enter the final round of the competition.

"China's advance in space has been real fast, and it would be amazing to internationally cooperate among different countries and also use the Chinese space station as a platform to perform experiments from people worldwide," Úrsula told the Global Times.

Speaking of recent tensions between China and the US in space, she said that "historically as space advancement is such a difficult matter, it has always needed collaboration between nations, and history has demonstrated that we have been able to surpass the political tensions for the good of science. I believe that this is actually a channel to show that we can cooperate and understand each other."

Other projects from the college student teams include an intelligent snake-shaped space robot presented by the Beijing Institute of Technology. Equipped with flexible pillars, it is lightweight and compact, providing technical support for internal structural monitoring and surgical functions that involve human-robot collaboration.

A team from Islamabad, Pakistan presented research on the impact of high speed and high altitude on the positioning of the BDS GNSS receiver, while Samara National Research University from Russia presented a study on antibiotic resistance in intestinal bacteria in a space environment.

High school students also impressed the audiences with a number of brilliant ideas. A team from Beijing presented the idea of space-induced mutagenesis breeding for roses to increase their oil production, as rose essential oil is one of the most expensive oils in the world.

The team from Foshan's Dali High School presented research on the cultivation of multi-generation mudskippers in the space station. The experiment aims to study amphibious fish that can be cultivated in the space station for long periods. The fish is a tasty and nutritious dish that could enrich astronauts' menus.

Li Shiyi, a freshman at Dali High School, told the Global Times that the project was inspired by the experiments taught by Shenzhou-13 taikonaut Wang Yaping while in orbit.

"During the Tiangong classroom, Wang talked about experiments involving fish and rice cultivation, and we extended it to the mudskipper. We noticed that the food they ate was always from vacuum-sealed bags brought from the ground, which may not be very fresh. So I hope that by conducting this experiment, they can eventually enjoy a hot bowl of fresh fish soup in space one day," Li said.

Li said that she wants to become a taikonaut like Wang after she grows up. "My wish is for China's space station to develop faster and better. I also hope to have more exchanges with countries like the US that have more advanced space technology. By doing so, we can use our mature and advanced technology to help countries that have relatively lagged behind in space development, making the world a better place."

At the opening ceremony of the finals, Zhang Feng, Chairman of the Chinese Institute of Electronics, said the institute will continue to use such conference platforms to comprehensively showcase cutting-edge technologies in the field of life electronics, explore future visions, and promote extensive collaboration in the field.

"Young talents are where the national innovation vitality and the hope for technological development lie. In the process of continuously creating new history in China's aerospace industry, a large number of young aerospace professionals have taken on major responsibilities, showcasing the spirit of Chinese youth in the new era with their radiance and vitality," Yu Miao, Director of the International Cooperation Center of China Aerospace, said while addressing the opening ceremony of the event.

Beijing's local government unveiled a new action plan on Wednesday for industrial innovation and development in the robot industry from 2023 to 2025, aiming to boost self-development across the supply chain in key technology areas.

The plan comes as a follow-up to government efforts to take an active approach in the preparation for future industry development in areas like robots and artificial intelligence (AI), experts said.

The plan aims to ramp up the industry layout of humanoid robots and support enterprises and universities in developing key robot components. The capital also aims to support the establishment of an innovation center for humanoid robots.

Specific goals are included in the plan. By 2025, Beijing's innovation capability in the robot industry will be greatly improved and 100 types of high-tech and high value-added robot products will be cultivated, along with 100 application scenarios.

The city's robot industry is expected to generate revenue of more than 30 billion yuan by 2025.

The application scenarios for humanoid robots are wide-ranging, with potential demand in industries ranging from the services sector to municipal firefighting, Xiang Ligang, a veteran technology analyst, told the Global Times on Wednesday. "We need to proactively plan and prepare for the future in order to be in the front league of the world," Xiang noted.

Talking about the importance of developing humanoid robots and AI, Xiang said that it requires high levels of technological integration and represents the core of technological development.

For example, the robots need to be able to move and maintain balance, and they should be able to sense and react to the surrounding environment, while demonstrating certain levels of artificial intelligence and understanding.

China has been an active player in technology development in this area, which gives the country an advantage for reaching its ambitious goals.

In January 2022, fifteen government departments including the Ministry of Industry and Information Technology and the National Development and Reform Commission rolled out a plan for China to become a global leader in robot technology innovation by 2025.

China's development in the related industries has been conspicuous in terms of expansion and technology advancement. For example, from 2016 to 2020, the scale of the country's robot industry grew at an average annual compound rate of about 15 percent.

Breakthroughs in key technologies and components such as precision reducers and intelligent controllers have also accelerated, and innovations and application scenarios are constantly emerging.

"We have already made significant progress domestically in terms of technological foundations. As the government takes the lead, providing funding and policy support, we can see that opportunities in the new industries - robots and AI - are about to emerge," Xiang said.

"Surely, this will require long-term investment, and it may take years to see significant results, but in order to take the lead, we must start preparing now," the expert said.